The data lakehouse is a relatively new data architecture that combines features of data lakes and data warehouses. By providing a more integrated and scalable way to manage and analyze data, lakehouses are a modern attempt to address the limitations of both traditional data warehouses and data lakes.

What is a Data Lakehouse?

So what exactly is a data lakehouse? It is a data management architecture for storing data on a designated server or cloud service and moving it in and out for analysis. It does this by combining and building on two very different architectures: data lakes and data warehouses.

Traditionally, organizations have run data lakes and data warehouses side by side. Typically, raw data would land in the data lake, and a structured subset would then be loaded into the warehouse for analysis. This setup comes with its own challenges, such as extra infrastructure costs and redundant data storage. Data lakehouses streamline the process through the use of layers, allowing both data filtering akin to a warehouse and low-cost storage similar to a data lake.

Advantages and Significance of Data Lakehouse

There are significant benefits to using a lakehouse architecture instead of a separate data warehouse or data lake. The system combines both structures into one, creating a middle ground for data management and significantly reducing maintenance and infrastructure costs. With this decreased cost comes the biggest advantage of data lakehouses: flexibility.

Lakehouses are flexible about what data goes in and out and can be used for many purposes that require moving data quickly, such as business intelligence, data streaming, or machine learning. This flexibility also shows in how they are accessed: through mainstream programming languages such as Python and Scala, or from other languages through APIs.

Key Components of a Data Lakehouse

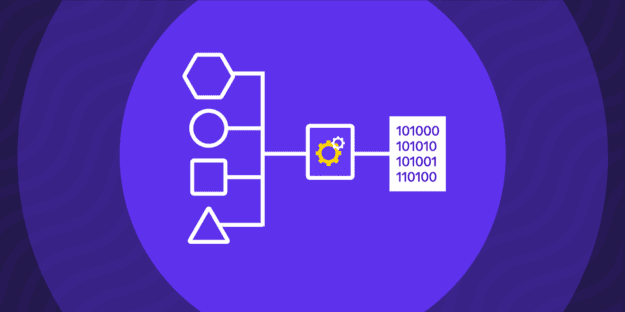

What separates data lakehouses from other data management architectures is their use of layers when ingesting and organizing data. Every layer serves a particular purpose, and combining the appropriate tools at each layer creates an efficient hybrid system for data retrieval and management. Data lakehouse architecture consists of five layers:

- Ingestion layer: This part of the data lakehouse architecture is used for data extraction. This data can come from many sources, such as transactional, relational, and NoSQL databases, data streams, and APIs.

- Storage layer: The ingested data is stored here, where it remains available for further processing should the need arise. Whoever manages the lakehouse can also pull data directly from this layer.

- Metadata layer: The defining element of a data lakehouse. Data reaching this layer can be cached, zero-copy cloned, indexed, and extracted, with support for ACID transactions. This layer also enforces schemas and rejects any data that doesn’t fit, making it similar to the ETL process in warehouses.

- API layer: This layer lets applications interface with the lakehouse. It holds the various interfaces that connect outside applications to the lakehouse so they can query data more efficiently.

- Data consumption layer: The user-facing layer of the lakehouse. It includes the tools and applications that communicate with the lakehouse through the above-mentioned APIs, such as business analytics tools or machine learning frameworks.
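
To make the layering concrete, here is a minimal sketch in plain Python of how ingestion, storage, schema enforcement, and an API entry point might fit together. The class `MiniLakehouse`, the `ORDER_SCHEMA` columns, and all method names are hypothetical, chosen only for illustration; real lakehouse platforms implement these layers very differently and at much larger scale.

```python
from dataclasses import dataclass, field

# Hypothetical schema for illustration: column name -> required Python type.
ORDER_SCHEMA = {"order_id": int, "customer": str, "amount": float}


@dataclass
class MiniLakehouse:
    """Toy model: raw storage plus a schema-enforcing metadata layer."""

    schema: dict
    raw_storage: list = field(default_factory=list)  # storage layer: keeps every record
    table: list = field(default_factory=list)        # rows accepted by the metadata layer
    rejected: list = field(default_factory=list)     # rows declined by schema enforcement

    def ingest(self, record: dict) -> bool:
        """Ingestion layer: land the record, then validate it against the schema."""
        self.raw_storage.append(record)
        if self._conforms(record):
            self.table.append(record)
            return True
        self.rejected.append(record)
        return False

    def _conforms(self, record: dict) -> bool:
        # A record conforms if it has exactly the schema's columns with matching types.
        if set(record) != set(self.schema):
            return False
        return all(isinstance(record[col], typ) for col, typ in self.schema.items())

    def query(self, predicate) -> list:
        """API layer: the entry point a consumption-layer tool would call."""
        return [row for row in self.table if predicate(row)]


lake = MiniLakehouse(ORDER_SCHEMA)
lake.ingest({"order_id": 1, "customer": "acme", "amount": 19.5})
lake.ingest({"order_id": "2", "customer": "globex", "amount": 5.0})  # wrong type for order_id
large_orders = lake.query(lambda row: row["amount"] > 10)
```

Note that the rejected record still sits in `raw_storage`: the storage layer keeps everything, while only validated rows are exposed through `query`.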

Implementing Data Lakehouse in Your Business

Data is usually ingested from multiple sources and then processed further depending on the type of lakehouse. The sources can be connected to the lakehouse through an external user interface or an application interface, allowing automatic data input. The process differs slightly depending on the method and software used, but it boils down to two general steps:

- First, the sources to be ingested are chosen. These can be SQL databases, uploaded files, SaaS applications, or other types of datasets. Usually, every source has its own connection protocol or API.

- Then comes the more complicated part of the ETL process: data transformation. This includes automapping fields, modifying the target schema, and resolving any conflicts that arise when porting the data into the lakehouse.

Conflicts mainly arise from incomplete data or missing fields, which may require the schema to be modified on entry. Once they have been resolved and the integration has succeeded, the ingested data moves on to the upper layers of the lakehouse to be further processed and analyzed.
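
The transformation step above can be sketched in a few lines of Python. This is an illustrative sketch only: the `TARGET_SCHEMA` columns, the name-normalization rule, and the helper functions `normalize`, `automap`, and `transform` are all assumptions made for the example, not the behavior of any particular ingestion tool.

```python
# Hypothetical target schema: column -> default value used when a source omits it.
TARGET_SCHEMA = {"customer_id": None, "email": None, "country": "unknown"}


def normalize(name: str) -> str:
    """Reduce a field name to lowercase alphanumerics so 'Customer-ID' matches 'customer_id'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def automap(source_fields, target_schema) -> dict:
    """Map each source field to the target column that shares its normalized name."""
    targets = {normalize(col): col for col in target_schema}
    return {
        src: targets[normalize(src)]
        for src in source_fields
        if normalize(src) in targets
    }


def transform(record: dict, target_schema: dict):
    """Rename mapped fields, fill missing columns with defaults, and report conflicts."""
    mapping = automap(record.keys(), target_schema)
    row = dict(target_schema)  # start from the defaults
    for src, col in mapping.items():
        row[col] = record[src]
    missing = [col for col in target_schema if col not in mapping.values()]
    unmapped = [src for src in record if src not in mapping]
    return row, missing, unmapped


row, missing, unmapped = transform(
    {"Customer-ID": 42, "E-Mail": "a@example.com", "notes": "vip"},
    TARGET_SCHEMA,
)
```

Here `missing` lists target columns the source did not supply, and `unmapped` lists source fields with no home in the target schema. Those two lists are the conflicts that someone would have to resolve, either by accepting the defaults or by modifying the schema.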

Comparing Data Lakehouse with Traditional Approaches

Although defined as an amalgamation of a data lake and a data warehouse, the lakehouse architecture differs slightly from its predecessors, integrating both approaches into something new.

The data warehouse method is highly organized. Incoming data must conform to a set structure, and on entry it is sorted into the appropriate category so that it can be retrieved later. The result is a tightly structured dataset that contains only what fits the predefined schema. This makes data extraction easier for users, but it limits the scope of that data for statistics or machine learning.

A data lake, on the other hand, prioritizes storage over structure. It can hold both structured and unstructured data, allowing a wider variety of data in a single set. This benefits AI training and business analytics, which need as much data as possible to produce more detailed results.

A lakehouse architecture layers these two approaches. The data ingestion and storage layers of a data lakehouse function like a data lake, while the metadata layer is effectively a warehouse down to the ETL protocol it applies to the ingested and stored data. A lakehouse differs from both because all the layers in it can be accessed, and data can be pulled from them. This offers a good balance of data diversity and structure.

Popular Data Lakehouse Tools

One of the most popular data lakehouse tools is Delta Lake. It’s an open-source storage framework and table format that acts as a storage layer and serves as a base on which users can build a lakehouse. The format builds on Apache Spark, another popular framework, and provides ACID transactions, schema enforcement, and schema evolution.
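
To give a feel for how a table format can offer transactional guarantees on top of plain files, here is a toy sketch of an append-only commit log with optimistic concurrency. It is loosely inspired by the transaction-log idea behind table formats such as Delta Lake, but it is not Delta Lake's implementation or API: the class `ToyTableLog`, its file layout, and its method names are hypothetical.

```python
import json
import os
import tempfile


class ToyTableLog:
    """Append-only commit log: one numbered JSON file per committed version."""

    def __init__(self, directory: str):
        self.directory = directory
        os.makedirs(directory, exist_ok=True)

    def _path(self, version: int) -> str:
        return os.path.join(self.directory, f"{version:020d}.json")

    def latest_version(self) -> int:
        versions = [
            int(name.split(".")[0])
            for name in os.listdir(self.directory)
            if name.endswith(".json")
        ]
        return max(versions, default=-1)

    def commit(self, read_version: int, rows: list) -> int:
        """Try to write version read_version + 1; fail if another writer claimed it first."""
        new_version = read_version + 1
        # O_EXCL makes creation fail if the file already exists,
        # so only one writer can claim a given version number.
        # Simplification: a real system would also make the write itself atomic.
        fd = os.open(self._path(new_version), os.O_WRONLY | os.O_CREAT | os.O_EXCL)
        with os.fdopen(fd, "w") as handle:
            json.dump({"add": rows}, handle)
        return new_version

    def snapshot(self) -> list:
        """Replay every commit in order to rebuild the current table contents."""
        rows = []
        for version in range(self.latest_version() + 1):
            with open(self._path(version)) as handle:
                rows.extend(json.load(handle)["add"])
        return rows


log = ToyTableLog(tempfile.mkdtemp())
base = log.latest_version()          # empty table, so no versions yet
log.commit(base, [{"id": 1}])        # first writer claims version 0

try:
    log.commit(base, [{"id": 2}])    # second writer still thinks the table is empty
    conflict = False
except FileExistsError:
    conflict = True                  # stale write rejected instead of overwriting

log.commit(log.latest_version(), [{"id": 2}])  # retry against the current version
```

The design choice worth noticing is that writers never modify existing files. A commit either claims the next version number or fails, so readers replaying the log always see a consistent sequence of changes.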

Apache Iceberg is another open-source option: a high-performance open table format released under the Apache License. Thanks to this permissive license, it is available to many data processing tools, offering a more bespoke experience. It aims to be simple and friendly to use, bringing the simplicity of SQL tables to big data.

Rivery offers an even easier-to-use, tailor-made experience, supporting over 200 different connectors, each with its protocol already integrated. Rivery is a closed, proprietary system, but it almost completely removes the need to work with APIs directly during ingestion, which makes for efficient data transfers.

Challenges and Considerations in Adopting Data Lakehouse

Data lakehouses are a very new architecture, and many people who work in data management or even software development are still unfamiliar with them. This can delay adoption of the technology and the testing needed to iron out its bugs.

Data engineers are also wary of completely moving their databases to this new system, as new skills may need to be mastered to operate a data lakehouse effectively, and new protocols could potentially cause them to lose precious data.

Real-world Applications of Data Lakehouse

Even though it’s a relatively new technology, the data lakehouse has found its way into businesses, most prominently through offerings from software giants such as Amazon (Redshift Spectrum) and Databricks. The model has also found its way into less software-oriented spaces, one being Walgreens. Adopting a lakehouse framework allowed the pharmacy chain to distribute medicine and vaccines effectively across different counties and states, cross-referencing vaccine availability with patient demand.

Future Trends in Data Lakehouse

The most prominent field in which data lakehouses are used is artificial intelligence, or more precisely, the training of generative AI models. These models require large datasets, which have historically been supplied by data lakes because they offer a wider variety of data.

In recent years, many security issues have come up in the field of generative AI models, shifting the focus away from raw, unprocessed data. As a result, data lakehouses are well positioned to take over this role from data lakes, offering a balance between filtering data and preserving its diversity. This has the positive side effect of improving AI security, since obscene or illegal data can be filtered out of the ingested dataset.

Conclusion

While a relatively new data management architecture, the data lakehouse is already proving itself as a leading approach in the data industry. Combining aspects of data warehouses and data lakes and building on them has made this architecture a force to be reckoned with when it comes to performance, scalability, and data extraction.

So, what is a data lakehouse? A very powerful tool regardless of where it’s used. Whether training a generative AI model or just maintaining and analyzing a simple database, data lakehouses cater to a wide variety of business needs, making them worth considering for your enterprise.
