For years, Snowflake and Rivery have partnered to help data-driven organizations build high-performing data frameworks. Just this past February, Rivery became a Premier Technology Partner with Snowflake, deepening our partnership to benefit our mutual customers. And now, we’re expanding our partnership once again.

Starting today, with Rivery’s inclusion in the Snowpark Accelerated program, developers can preview the full functionality of Snowpark directly inside Rivery. Developers can now use Snowpark in Rivery to work on Snowflake in their preferred programming languages and to execute Scala code and Java UDFs in automated data workflows.

What is Snowpark?

Snowpark is a new developer experience that allows data analysts, data scientists, and data engineers to write code in their preferred programming languages and run it on Snowflake. Snowflake has always supported SQL natively. With Snowpark, developers can now also code in other languages, such as Scala, and execute Java UDFs on Snowflake through an easily accessible DataFrame model.

Snowpark expands data programmability and makes it more efficient to operationalize data pipelines at scale, all from Snowflake’s single, integrated platform. With Snowpark, developers can create and manage more of their workflows entirely within Snowflake’s Data Cloud, without having to manage additional processing systems.

Snowpark opens up a new world of possibilities for data teams. Developers can now use their preferred programming languages to run workloads such as ETL/ELT, data preparation, and feature engineering directly on Snowflake. The scalability, performance, security, and near-zero maintenance of Snowflake have never been easier to access.

Unlock New Dimensions of Your Data with Rivery’s Snowpark Integration

Many of our customers, including Bayer, Preqin, and Entravision, rely on Snowflake as their cloud data platform. After ingesting data into Snowflake, our customers often perform SQL-based transformations to clean, prepare, and format the data for business intelligence, ML/AI models, and critical decision-making. Now, with Snowpark, Rivery customers can transform data on Snowflake with more than SQL: they can run Scala code and Java UDFs, with support for Java code coming soon.

Using Snowpark’s expanded programming capabilities, our customers can unlock new dimensions of their data. They can now run SQL, Scala, and Java UDFs on Snowflake in parallel, directly inside Rivery. This gives our customers greater control over their data and helps them extract more valuable insights. Rivery’s Snowpark integration also simplifies data operations by centralizing data workflows and eliminating the need for separate processing systems.

Rivery customers can also embed Snowpark’s functionality in automated data workflows. Rivery’s Logic Rivers combine data ingestion and data transformation into a single automated workflow. Logic Rivers can now ingest data from any data source, execute transformations via SQL, Scala, or Java UDFs, and trigger further logic steps, such as reverse ETL, within a pre-set automated flow. With the power of Snowpark, Logic Rivers offer fully automated data orchestration.
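To make the idea of a pre-set flow concrete, here is a minimal Scala sketch, assuming a Logic River can be modeled as an ordered list of steps where each step receives the current variables and hands an updated set to the next one. `LogicRiverSketch`, `FnStep`, and the variable values are hypothetical illustrations, not Rivery’s API.

```scala
// Hypothetical model of a Logic River: ordered steps that pass an immutable
// map of variables from one step to the next. Not Rivery's actual API.
object LogicRiverSketch {
  type Vars = Map[String, String]

  sealed trait Step {
    def name: String
    def run(vars: Vars): Vars
  }

  // A step defined by a plain function from variables to updated variables.
  final case class FnStep(name: String, f: Vars => Vars) extends Step {
    def run(vars: Vars): Vars = f(vars)
  }

  // Run the steps in order; each step's output becomes the next step's input.
  def runAll(steps: Seq[Step], initial: Vars): Vars =
    steps.foldLeft(initial)((vars, step) => step.run(vars))

  def main(args: Array[String]): Unit = {
    val steps = Seq(
      FnStep("ingest", v => v + ("tickets_table" -> "HUBSPOT_TICKETS")),
      FnStep("transform", v => v + ("results" -> ("scored:" + v("tickets_table")))),
      FnStep("reverse_etl", v => v + ("pushed" -> v("results")))
    )
    println(runAll(steps, Map.empty))
  }
}
```

Folding over the steps keeps the ordering explicit: a later step (such as reverse ETL) can only see what earlier steps (ingestion, transformation) have produced.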

Implement Sentiment Analysis Using Rivery’s Snowpark Integration

In this section, we will show you an example of Rivery’s Snowpark integration in action. Here’s how to use Rivery’s Snowpark integration to create an automated “sentiment analysis” workflow that:

1. Ingests Hubspot data into Snowflake

2. Leverages Snowpark to segment Hubspot tickets into three categories: “Positive,” “Negative,” or “Neutral.”

3. Pushes this sentiment analysis data back into Hubspot for line-of-business users (customer service, sales, etc.).

This sentiment analysis workflow combines user-provided code in a JAR file, custom logic, and Rivery’s reverse ETL capabilities. Here’s a step-by-step guide to setting up the workflow in Rivery.

Sentiment Analysis Workflow: Step-by-Step Guide

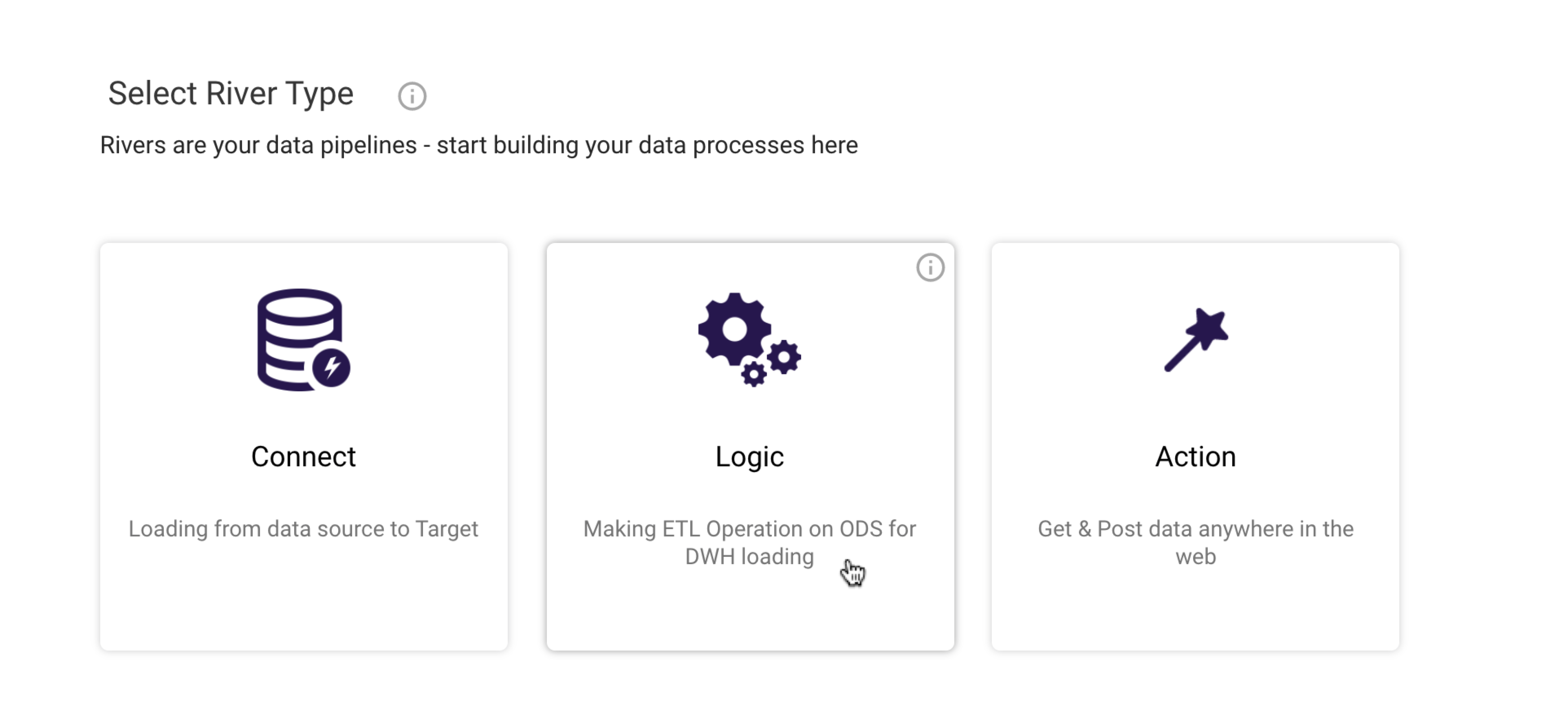

1. Create a new Logic River. Click ‘Create New River’ in the top right corner of the screen, then click ‘Logic’ under ‘River Type’.

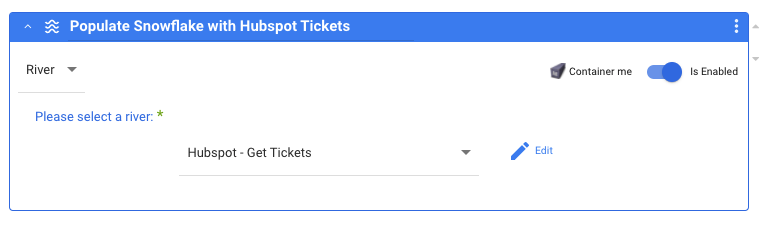

2. Add a new Logic Step. Classify this Step as a River. Select the River that pulls your Hubspot tickets – in this example, Hubspot – Get Tickets. This River will load the Hubspot tickets data into a Snowflake table.

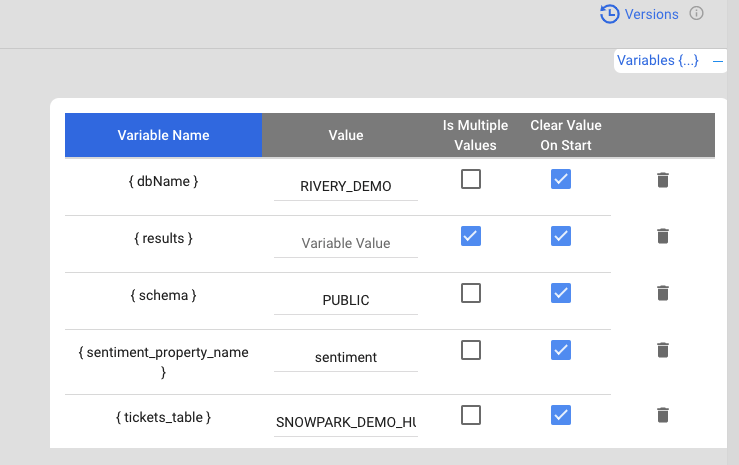

3. Click the Variables tab to the right of the Logic Rivers interface. Define variables and arguments based on the Hubspot tickets data pulled during Step 2, including:

- {dbName} = Snowflake database name

- {results} = empty multi-value variable for Snowpark results

- {schema} = database schema

- {sentiment_property_name} = Hubspot ticket property that holds the sentiment data

- {tickets_table} = table where the tickets are stored

These logic variables and connection arguments can be passed into a JAR file for manipulation. This makes the code reusable: you can use the same JAR file in multiple Rivers simply by changing the Snowflake connection in each step.
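As a minimal sketch, assuming the variables reach the JAR as `key=value` command-line arguments (the exact passing mechanism is an assumption), the JAR’s entry point might collect them into a map and build the fully qualified tickets table name. `JarArgsSketch` and its helpers are hypothetical names.

```scala
// Hypothetical entry point for the sentiment JAR. Assumes (unconfirmed) that
// logic variables arrive as "key=value" command-line arguments.
object JarArgsSketch {
  // Parse "key=value" pairs; arguments without '=' or with an empty key are ignored.
  def parseArgs(args: Array[String]): Map[String, String] =
    args.toSeq
      .map(_.split("=", 2))
      .collect { case Array(k, v) if k.nonEmpty => k -> v }
      .toMap

  // Build DB.SCHEMA.TABLE from the logic variables.
  // Throws NoSuchElementException if any of the three variables is missing.
  def ticketsTable(vars: Map[String, String]): String =
    Seq("dbName", "schema", "tickets_table").map(vars).mkString(".")

  def main(args: Array[String]): Unit = {
    val vars = parseArgs(args)
    println(s"Reading tickets from ${ticketsTable(vars)}")
  }
}
```

Because the table location comes from arguments rather than being hard-coded, the same JAR can serve several Rivers, which is the reusability described above.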

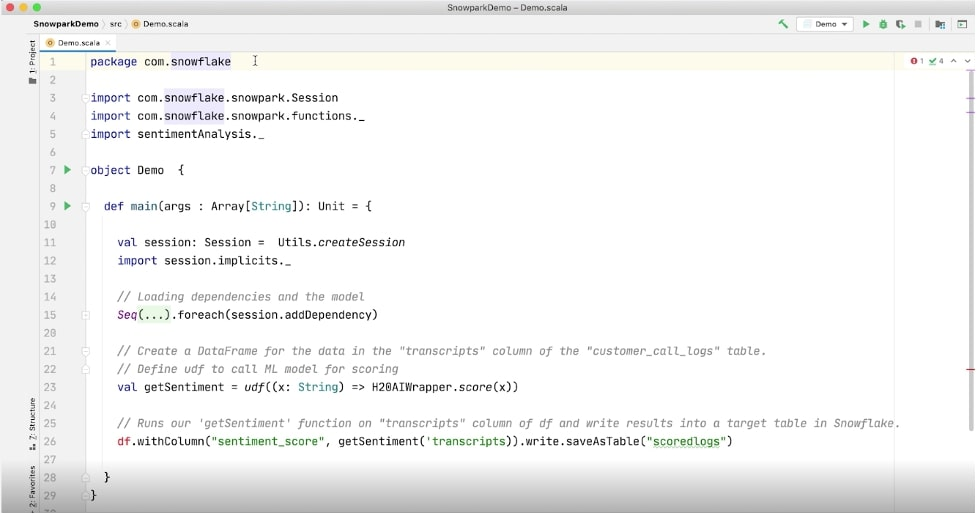

4. Next, we will perform sentiment analysis using a JAR file. The JAR file must be an executable ‘fatJar’ (or ‘uberJar’) that bundles all of its dependencies. The code itself is written in Scala, the JVM programming language currently supported by Snowpark. Here is the full code:

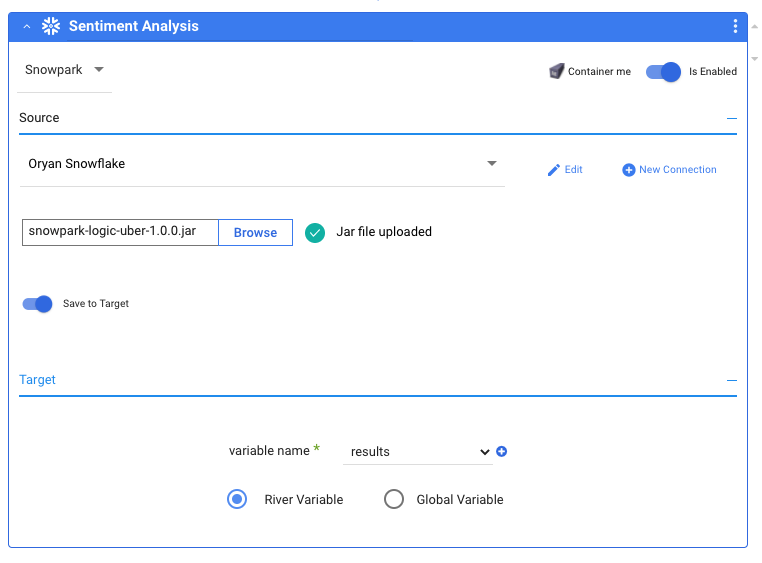
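The classification step itself can be illustrated with a deliberately simple, hypothetical word-list scorer. This is not the code in the JAR above: the lexicons are invented for illustration, and a real JAR would read ticket text from `{tickets_table}` through Snowpark rather than from a hard-coded list.

```scala
// Hypothetical word-list sentiment scorer; the lexicons are illustrative only
// and are not taken from the original sentiment analysis code.
object SentimentSketch {
  private val positive = Set("great", "thanks", "resolved", "love", "happy", "fast")
  private val negative = Set("broken", "bad", "slow", "angry", "refund", "error")

  // Lower-case the text and split on any run of non-letter characters.
  private def tokens(text: String): Seq[String] =
    text.toLowerCase.split("[^a-z]+").toSeq.filter(_.nonEmpty)

  // Score = (# positive words) - (# negative words); the sign picks the label.
  def classify(text: String): String = {
    val ws = tokens(text)
    val score = ws.count(positive.contains) - ws.count(negative.contains)
    if (score > 0) "Positive" else if (score < 0) "Negative" else "Neutral"
  }

  def main(args: Array[String]): Unit =
    Seq("Thanks, issue resolved fast!", "Dashboard is broken again", "Please update my address")
      .foreach(t => println(s"$t -> ${classify(t)}"))
}
```

A lexicon approach keeps the sketch dependency-free; a production JAR would more likely call a trained model, which is why it has to be packaged as a fatJar with its dependencies.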

5. Click Add Logic Step. Select Snowpark from the dropdown in the top left corner. Under Source, choose your desired Snowflake connection. Click Browse and upload the JAR file containing the sentiment analysis code. At runtime, connection arguments will be passed into the JAR file.

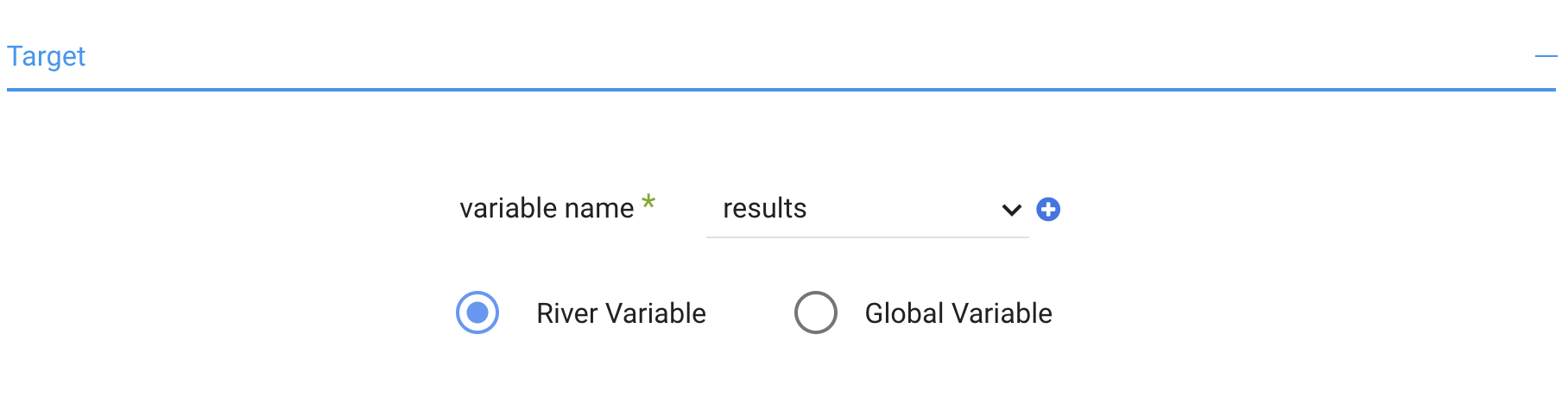

6. Store the values returned from the JAR inside a multi-value variable called ‘results’ by generating a results.csv file.
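One possible shape for results.csv is sketched below, assuming one `ticket_id,sentiment` row per ticket. The column layout Rivery expects is an assumption, and `ResultsCsvSketch` is a hypothetical name.

```scala
import java.io.{File, PrintWriter}

// Hypothetical writer for results.csv: one "ticket_id,sentiment" row per ticket.
// The column layout is an assumption, not a documented Rivery format.
object ResultsCsvSketch {
  // Quote a field that contains a comma, quote, or line break (RFC 4180 style).
  def escape(field: String): String =
    if (field.exists(c => c == ',' || c == '"' || c == '\n' || c == '\r'))
      "\"" + field.replace("\"", "\"\"") + "\""
    else field

  // Header line followed by one line per (ticketId, sentiment) pair.
  def toCsv(rows: Seq[(String, String)]): String =
    (Seq("ticket_id,sentiment") ++ rows.map { case (id, s) => escape(id) + "," + escape(s) })
      .mkString("", "\n", "\n")

  def write(rows: Seq[(String, String)], path: String = "results.csv"): Unit = {
    val out = new PrintWriter(new File(path), "UTF-8")
    try out.write(toCsv(rows)) finally out.close()
  }

  def main(args: Array[String]): Unit =
    write(Seq("101" -> "Positive", "102" -> "Negative"))
}
```

Keeping the ticket ID next to each label is what lets the later loop match every result to the right Hubspot ticket.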

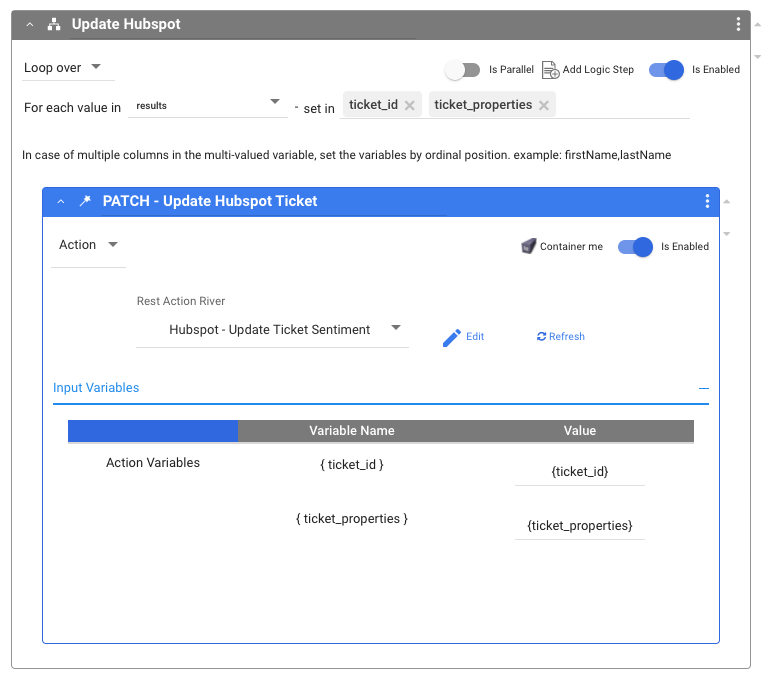

7. Finally, we push the ‘result’ for each ticket back into Hubspot via reverse ETL. The loop container executes an action pipeline to feed each result back to the corresponding ticket in Hubspot.

8. Voilà: each Hubspot ticket now has a sentiment analysis result associated with it!
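For reference, each iteration of the loop in step 7 amounts to updating one ticket property in Hubspot. The sketch below only builds the request that HubSpot’s CRM v3 API accepts for a ticket update (`PATCH /crm/v3/objects/tickets/{ticketId}` with a `properties` object); Rivery’s action pipeline makes the actual call, and `ticket_sentiment` is a hypothetical stand-in for whatever `{sentiment_property_name}` holds.

```scala
// Hypothetical sketch of the request each loop iteration sends to HubSpot.
// Rivery's action pipeline performs the real call; nothing is sent here.
object HubspotUpdateSketch {
  final case class Request(method: String, path: String, body: String)

  // Minimal JSON string escaping (backslashes and quotes only).
  private def jsonString(s: String): String =
    "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\""

  // One PATCH per ticket against HubSpot's CRM v3 tickets endpoint.
  def updateFor(ticketId: String, property: String, sentiment: String): Request =
    Request(
      "PATCH",
      "/crm/v3/objects/tickets/" + ticketId,
      "{\"properties\":{" + jsonString(property) + ":" + jsonString(sentiment) + "}}"
    )

  def main(args: Array[String]): Unit = {
    val results = Seq("101" -> "Positive", "102" -> "Negative")
    results.map { case (id, s) => updateFor(id, "ticket_sentiment", s) }.foreach(println)
  }
}
```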

Try Rivery’s Snowpark Integration Now!

For years, Rivery and Snowflake have partnered to help our mutual customers win with data. Rivery’s Snowpark integration is the latest step in that partnership, and there’s more to come. Starting today, Rivery’s Snowpark integration is available in preview for all Rivery customers. Try the integration in Rivery now to take your DataOps management to the next level!

For more information, read the full press release for Rivery’s Snowpark integration on Yahoo.
